Article Review

Noam Chomsky has garnered celebrity status for his activism and for his positions on various political issues. A side effect of this prominence seems to have been a relative waning in public attention to the fact that, for the first several decades of his career, he was known as the leading figure in the field of linguistics.

Chomsky’s notions of universal grammar and generative grammar wrought a paradigm shift in linguistics, particularly where the discipline overlaps with psychology, in psycholinguistics and biolinguistics. They also had an enormous impact on cognitive science, neuroscience, and computer science, and through these fields contributed to the development of artificial intelligence.

Given Chomsky’s place in intellectual history, “The False Promise of ChatGPT”, the essay he co-wrote with Ian Roberts and Jeffrey Watumull for the New York Times in March 2023, is a remarkable document.

First, some background. Chomskyan linguistics seeks to explain how human beings, on the basis of a limited set of data, are able to acquire a natural language that enables them to produce and understand an unlimited number of sentences. Chomsky posited that this is an innate human capacity. The thesis had been implicit in the work of earlier linguists, but he was the first in the English-speaking world to make it explicit and develop it into a theory. In Chomsky’s view, human language is shaped by a set of innate principles, a “universal grammar” that underlies all existing natural languages (Cowie, 2017).

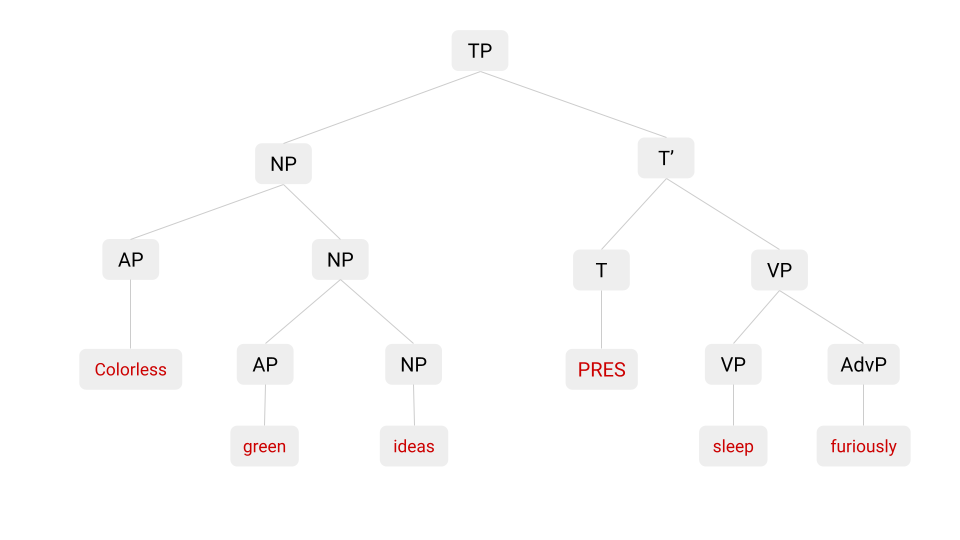

Building on this premise, Chomsky defined a “generative grammar” as a set of rules for a given language that aims to model a speaker’s unconscious syntactic knowledge of that language: the knowledge that, through recursion, allows the speaker both to produce and to interpret a potentially infinite number of grammatically correct sentences. Generative grammar, and the way in which it models natural languages, has likewise been hugely influential: generations of students have encountered one form or another of the syntax tree inspired by it.
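To make the role of recursion concrete, here is a minimal sketch in Python, assuming a toy phrase-structure grammar with invented rules and vocabulary (`GRAMMAR` and `expand` are hypothetical names). It is not Chomsky’s own formalism, which went through several revisions over his career; it only shows how a finite rule set, one rule of which reintroduces a whole sentence inside a verb phrase, licenses more and more sentences as the allowed depth of nesting grows.

```python
from itertools import product

# A toy phrase-structure grammar (hypothetical rules and vocabulary, for illustration only).
# Each nonterminal maps to a list of alternative right-hand sides.
GRAMMAR: dict[str, list[list[str]]] = {
    "S": [["NP", "VP"]],
    "NP": [["Mary"], ["John"]],
    # The second VP rule embeds a whole S inside a VP: this is the recursion.
    "VP": [["sleeps"], ["thinks", "that", "S"]],
}


def expand(symbol: str, depth: int) -> list[str]:
    """Return every string derivable from `symbol` with at most `depth` levels of rule expansion."""
    if symbol not in GRAMMAR:  # terminal word: nothing left to expand
        return [symbol]
    if depth == 0:  # nonterminal but no expansion budget left
        return []
    results: list[str] = []
    for rhs in GRAMMAR[symbol]:
        # Expand each symbol on the right-hand side, then combine every choice.
        parts = [expand(s, depth - 1) for s in rhs]
        for combo in product(*parts):
            results.append(" ".join(combo))
    return results


if __name__ == "__main__":
    # Four finite rules, yet the number of sentences keeps growing with depth.
    for depth in (2, 4, 6, 8):
        print(depth, len(expand("S", depth)))
```

With a depth of 2 the grammar yields only “Mary sleeps” and “John sleeps”; each additional level of embedding (“Mary thinks that John sleeps”, “John thinks that Mary thinks that John sleeps”, and so on) adds new sentences, and no depth limit exhausts them.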

Returning to the Times article, Chomsky argues that AI technology falls short of its promise in at least three ways: linguistic, epistemic, and ethical.

In linguistic terms, Chomsky contrasts the statistical handling of language by AI with the way in which humans acquire and use language. While machines require notoriously vast amounts of data to draw statistical inferences, “a young child acquiring a language is developing — unconsciously, automatically and speedily from minuscule data — a grammar, a stupendously sophisticated system of logical principles and parameters”.

Human beings are innately able to acquire a wealth of insight from a paucity of data, whereas machines, as argued below, achieve a paucity of insight despite the vast wealth of data now available to them. In this regard, Chomsky says, alluding to his own notion of universal grammar, “[t]he child’s operating system is completely different from that of a machine learning program”.

Furthermore, Chomsky argues, critical thinking, the hallmark of true science, is not just a matter of describing what was and is the case or predicting what will be the case. It also requires the ability to conceive of, and distinguish, what is not the case and what could and could not be the case: what does not exist, what is possible, and what is impossible.

Description and prediction, Chomsky says, are the crux of machine learning. Systems like ChatGPT ingest enormous amounts of data and, through brute statistical force, infer correlations between data points. But they do not generate explanations, because they do not distinguish between what is possible and what is impossible. Such a system can “learn” both that the earth is round and that the earth is flat, since “they trade merely in probabilities that change over time”.
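Chomsky’s point about “probabilities that change over time” can be illustrated with a deliberately crude sketch in Python. It assumes a word-counting model far simpler than ChatGPT, which relies on a neural network rather than raw counts; the function name `continuation_probs` and the tiny corpus are hypothetical. The model holds no rule that the earth cannot be flat. It only reports relative frequencies, so its “belief” shifts with whatever text it is fed.

```python
from collections import Counter
from fractions import Fraction


def continuation_probs(corpus: list[str], prefix: str) -> dict[str, Fraction]:
    """Relative frequency of each word that directly follows `prefix` in the corpus.

    Toy model (hypothetical, for illustration): no grammar, no world knowledge,
    only counts of what has been seen before.
    """
    prefix_words = prefix.split()
    n = len(prefix_words)
    counts: Counter = Counter()
    for sentence in corpus:
        words = sentence.split()
        # Count the next word only when the sentence starts with the prefix.
        if words[:n] == prefix_words and len(words) > n:
            counts[words[n]] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {word: Fraction(c, total) for word, c in counts.items()}


if __name__ == "__main__":
    corpus = ["the earth is round"] * 2 + ["the earth is flat"]
    print(continuation_probs(corpus, "the earth is"))
    # Feed the model more text asserting the opposite; nothing stops its estimate from flipping.
    corpus += ["the earth is flat"] * 3
    print(continuation_probs(corpus, "the earth is"))
```

On the first corpus “round” gets probability 2/3 and “flat” 1/3; after three more “flat” sentences the figures swap to 1/3 and 2/3. Exact fractions are used only to keep the arithmetic easy to check.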

Finally, Chomsky contends that, despite constant efforts by their creators to set guardrails against ethically objectionable content, AI systems suffer from “the moral indifference born of unintelligence”:

ChatGPT exhibits something like the banality of evil: plagiarism and apathy and obviation. It summarizes the standard arguments in the literature by a kind of super-autocomplete, refuses to take a stand on anything, pleads not merely ignorance but lack of intelligence and ultimately offers a “just following orders” defense, shifting responsibility to its creators.

This is an explicit reference to Hannah Arendt’s notion of the banality of evil, a connection that Chomsky is not the only one to have drawn (Kaspersen, Leins, & Wallach, 2023). Here, in the final strand of his argument, Chomsky speaks not as a linguist but as a moral philosopher.

This is a very strong stance to take, particularly coming from someone whose work has, to no small extent, defined the intellectual landscape of our time and its technological consequences. That is what makes this article, whatever one’s views on AI may be, such a meaningful read.

References:

Cowie, F. (2017). Innateness and Language. In The Stanford Encyclopedia of Philosophy (Fall 2017 Edition). https://plato.stanford.edu/archives/fall2017/entries/innateness-language

Kaspersen, A., Leins, K., & Wallach, W. (2023). Are We Automating the Banality and Radicality of Evil? Carnegie Council for Ethics in International Affairs. https://www.carnegiecouncil.org/media/article/automating-the-banality-and-radicality-of-evil